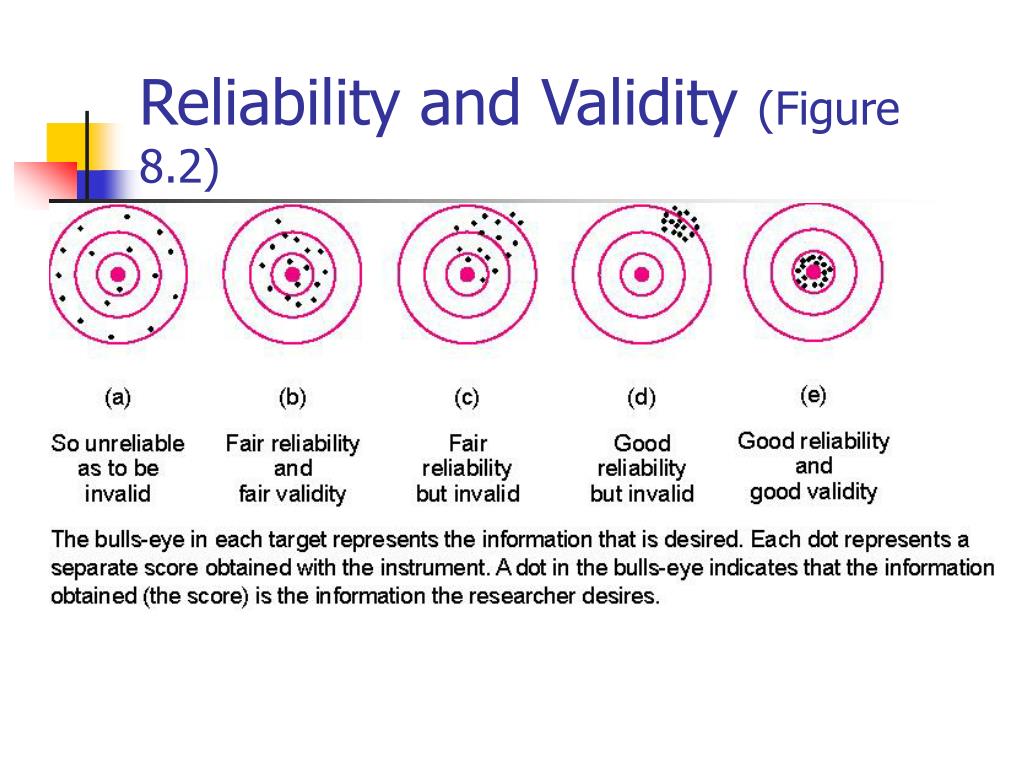

Steps that can improve reliability include:

- Testing rubrics and calculating an interrater reliability coefficient. Interrater reliability = number of agreements / number of possible agreements.
- Conducting norming sessions to help raters use rubrics more consistently.
- Recalculating interrater reliability until consistency is achieved.
- Increasing the number of questions on a multiple choice exam that address the same learning outcome.

Validity is the extent to which a measurement tool measures what it is supposed to measure. More specifically, it refers to the extent to which inferences made from an assessment tool are appropriate, meaningful, and useful (American Psychological Association and the National Council on Measurement in Education). To be valid, a measurement must first be reliable. Validity is often thought of as having different forms. Perhaps the most relevant to assessment is content validity, or the extent to which the content of the assessment instrument matches the student learning outcomes (SLOs).

Steps that can improve content validity include:

- Examining whether rubrics have extraneous content or whether important content is missing.
- Constructing a table of specifications prior to developing exams.
- Performing an item analysis of multiple choice questions.
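The interrater reliability ratio given above (number of agreements divided by number of possible agreements) can be computed in a few lines. The following is a minimal sketch, not a prescribed method: the function name `interrater_agreement` and the sample rubric scores are hypothetical, and it assumes two raters who each scored the same set of student work in the same order.

```python
def interrater_agreement(rater_a, rater_b):
    """Return agreements / possible agreements for two raters.

    Assumes both lists hold scores for the same items in the same order.
    """
    if len(rater_a) != len(rater_b):
        raise ValueError("Both raters must score the same set of items")
    if not rater_a:
        raise ValueError("At least one scored item is required")
    # An agreement is an item on which both raters gave the identical score.
    agreements = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return agreements / len(rater_a)


# Hypothetical rubric scores (1-4 scale) for eight student papers.
scores_a = [3, 4, 2, 4, 1, 3, 2, 4]
scores_b = [3, 4, 3, 4, 1, 2, 2, 4]

print(interrater_agreement(scores_a, scores_b))  # 0.75 (6 of 8 scores match)
```

Recomputing this ratio after each norming session gives a simple way to see whether raters are converging. Note that simple percent agreement does not correct for agreement that would occur by chance, so it tends to look more favorable than chance-corrected statistics.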

Reliability and validity are important concepts in assessment; however, the demands for reliability and validity in SLO assessment are not usually as rigorous as in research. While you should take steps to improve the reliability and validity of your assessment, you should not become so paralyzed that you cannot draw conclusions from your results, or spend your effort continuously redeveloping your assessment instruments rather than using the results to try to improve student learning. Instead, be mindful of your assessment's limitations, but go forward with implementing improvement plans.
